Reprogrammable FPGAs help customers improve performance, power and time to market

While improvements in processors and software solutions have catalyzed major advances in High Performance Computing (HPC) systems, the need for customizable performance is an ever-present challenge for those researchers, academic institutions, and corporations relying on supercomputers every day. The latest generation of HPC-optimized processors has delivered major advances in supercomputing performance. Each processor is optimized for its own niche in HPC. For example, with excellent multi-threading capability and a large partner ecosystem, Intel Xeon processors are ideal for servers running diverse workloads, storage, networking, enterprise servers, and more. Intel Xeon Phi processors, on the other hand, are architected for highly parallel and heavily threaded applications.

While Moore’s Law continues to define how each new generation of processor will exceed its predecessor’s performance, engineers are simultaneously exploring other ways to accelerate workloads through the processors available today. Altera took on this challenge through refinement of Field Programmable Gate Array (FPGA) devices. FPGAs can be programmed and customized by the end user to accelerate many HPC workloads.

In December 2015, Intel acquired Altera, and the combined technological expertise of their teams has led to solutions that are helping customers achieve new levels of performance. In the field, FPGAs have proven their ability to more than double the speed of HPC systems on targeted applications (1), compared with using an Intel Xeon or Intel Xeon Phi processor alone. For those organizations seeking to add every ounce of performance to their existing supercomputing systems, that’s a huge benefit.

Increasing performance, reducing energy consumption

The idea of offloading some of the workload from the main processor to a co-processor is not new. This technique has generated some very effective performance improvements for supercomputers in service today. However, the downside of a standard co-processor is its limited adaptability. A co-processor can be designed as a very effective accelerator for a particular workload, but if that same co-processor is dropped into a different workload scenario – one it is not specifically designed to accelerate – it may not deliver the goods.

In contrast, an FPGA is rather chameleon-like. It can be configured in unique ways to address the needs of the particular workload it is programmed to assist. Think of it this way: In a child’s Lego building set, a huge number of specialized pieces are at the ready to be combined and used in whatever ways she chooses. Bricks, windows, wheels, and flat plates can be combined to create a miniature city today, then re-combined next week to create a toy spaceship. Extending this analogy illustrates the extremely flexible, customizable, and functional nature of FPGAs. An individual FPGA packs onto its surface millions of configurable components including logic elements, DSP blocks, high-speed transceivers, memory blocks, memory controllers and more. The sum of these parts gives FPGAs a big advantage over conventional GPUs and CPUs: up to 100 times the number of individual computational units.

With all these capabilities on tap, a “blank slate” FPGA can be programmed to utilize any or all of those configurable elements, optimizing the necessary pieces for a very specific workload. An FPGA can be programmed to serve its owner as something relatively simple like additional high-speed memory, or it can take on much more significant responsibilities. Software can define the FPGA as a high-speed storage controller, a network adapter, or a pre- or post-processor, or configure it to run single-precision floating-point operations for intensive parallel computing applications.

Another major benefit of FPGA offload is energy efficiency in the larger supercomputing environment. FPGAs can reduce power consumption of an HPC system in two ways. First, programmed FPGAs can take on tasks delegated from the Intel Xeon or Intel Xeon Phi processor, optimizing the workload for system computational performance. Second, because the FPGA’s role is defined by software, only the needed onboard elements of the FPGA are activated. The rest of the FPGA’s configurable elements can remain dormant unless called into service by alternate programming.

As Ian Land, Intel’s data center senior product marketing manager (formerly of Altera), describes this benefit, “With FPGA designs, we originally set out to help customers get the maximum computing power per watt of energy. Having an FPGA in an HPC system is a little like having a high performance car engine which can also operate as an electric hybrid. All of a gasoline engine’s power is there when it’s really required, but in lower-demand scenarios, a much more efficient system can take over to save fuel.”

Power where you need it

According to Intel, FPGA programming can be done by a customer’s qualified onsite experts with skills in languages like OpenCL, C/C++, and Fortran. Intel can also partner with customers to develop code optimized for their particular workload. Land adds, “There is certainly a learning curve associated with FPGA programming, so Intel is helping customers with more tools to make the process easier, especially simplifying the effort for application developers who are comfortable with the Intel Architecture.”

Once the appropriate optimizations are coded, an FPGA can be configured permanently for a specific role as part of a supercomputing system, or that same FPGA can be programmed again and again to serve multiple purposes. Take the hypothetical example of a university research lab: one department might require an HPC system to process enormous amounts of weather data in order to model long-term weather patterns. Another department at the same university may need the same supercomputing system for mapping the human genome. In a scenario like this, an FPGA can dramatically streamline the process of changeover from one research team to another. In a matter of milliseconds, software can be uploaded to the FPGA, optimizing it for one research department’s required parallel computing scenario. At any time in the future, new software can be uploaded within milliseconds, configuring the FPGA for another department. Because of this extreme, on-the-fly flexibility, FPGAs can help researchers save significant amounts of HPC configuration time, allowing them to focus on their research project results – not tweaking the supercomputer that will deliver them. FPGAs can also save a university money, since hardware components do not need to be changed out and there’s no need to configure a redundant HPC system for another department’s unique requirements.
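The changeover between departments can be pictured with a small software model. The Python sketch below is only an analogy, not real FPGA tooling: the class `ReconfigurableAccelerator`, its `load` and `run` methods, and the two stand-in workloads are hypothetical names introduced here for illustration. A real FPGA would be reprogrammed with a configuration image produced by the vendor's tools rather than with a Python function.

```python
from typing import Any, Callable, Optional, Sequence

# A "kernel" here is just a function; it stands in for an FPGA configuration.
Kernel = Callable[[Sequence[Any]], float]


class ReconfigurableAccelerator:
    """Toy model of a device whose role is defined by what is loaded (hypothetical)."""

    def __init__(self) -> None:
        self.role: Optional[str] = None
        self._kernel: Optional[Kernel] = None

    def load(self, role: str, kernel: Kernel) -> None:
        # Loading replaces the previous configuration; the hardware is reused.
        self.role = role
        self._kernel = kernel

    def run(self, data: Sequence[Any]) -> float:
        if self._kernel is None:
            raise RuntimeError("no configuration loaded")
        return self._kernel(data)


def mean_temperature(samples: Sequence[float]) -> float:
    # Stand-in for a weather-modelling workload (assumption for illustration).
    return sum(samples) / len(samples)


def gc_fraction(bases: Sequence[str]) -> float:
    # Stand-in for a genomics workload: share of G and C bases in a sequence.
    return sum(1 for base in bases if base in "GC") / len(bases)


fpga = ReconfigurableAccelerator()

# The first department configures the device for weather data.
fpga.load("weather", mean_temperature)
weather_result = fpga.run([10.0, 20.0, 30.0])

# Later, a second department reconfigures the same device for genomics.
fpga.load("genomics", gc_fraction)
genome_result = fpga.run("GGCA")
```

The point of the sketch is that one object serves both teams in turn: only the loaded configuration changes, which mirrors the article's claim that no hardware needs to be swapped between research groups.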

The security industry is another sector benefitting greatly from programmable FPGAs. In that business, constantly changing security information, ensuing threat analysis, encryption, decryption, and security mechanisms like firewalls demand mission-critical speed and customization options. Milliseconds count. With an FPGA at work accelerating computing systems, security experts can more quickly and effectively manage day-to-day security needs as well as potential emergent threats.

Is an FPGA right for me?

While this advanced technology has the capacity to generate significant improvements for many HPC applications, FPGAs are unfortunately not the one-stop solution for every scenario. Mark Kachmarek, HPC Platform Marketing Manager at Intel, suggests, “FPGAs can be designed to accelerate a range of different HPC workloads. While it has made a big difference for many customer scenarios, we’ve found it especially helpful for workloads where single precision and low-wattage performance acceleration is a customer consideration. In contrast, an Intel Xeon Phi processor, with its Many Integrated Core architecture, shows excellent performance on highly parallel computing workloads, such as simulation, molecular dynamics, weather, etc.”

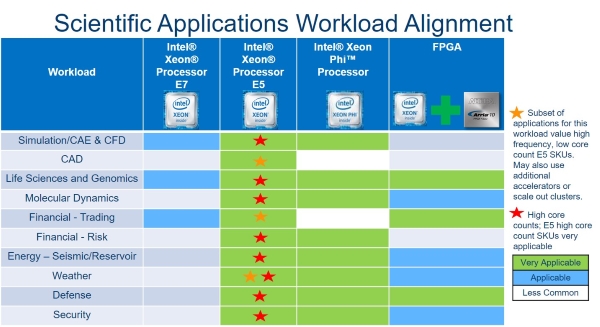

The accompanying chart describes common scientific application workload scenarios and the processor configurations that offer the greatest performance boost for each workload. There are similar workload scenarios for other applications, such as business processing, analytics, cloud services, visualization, audio, communications and storage.

A bright future

Today’s advanced supercomputing systems exceed performance levels only dreamed about a decade ago, and we’ve come a long way in achieving the speed and efficiency needed to tackle some of the most daunting problems facing scientists, researchers, and corporations. Intel Xeon and Xeon Phi processors have made major leaps forward in their own right, and FPGA technology represents another significant advancement in supercomputer performance.

If the supercomputers your organization depends on could use a turbo charge, it’s well worth exploring whether your workloads could benefit from FPGAs. In many scenarios, FPGAs offer HPC architects and end-users immediate performance benefits, unique and rapid customization options, plus a reduction in energy consumption that can offset the initial capital investment.

(1) https://www.microsoft.com/en-us/research/wp-content/uploads/2016/02/Catapult_ISCA_2014.pdf

Rob Johnson spent much of his professional career consulting for a Fortune 25 technology company. Currently, Rob owns Fine Tuning, LLC, a strategic marketing and communications consulting company based in Portland, Oregon. As a technology, audio, and gadget enthusiast his entire life, Rob also writes for TONEAudio Magazine, reviewing high-end home audio equipment.


© HPC Today 2024 - All rights reserved.
