In sync

Given the specifics of both architectures, we had to adopt an appropriate testing approach. In real-world applications, memory accesses and compute operations are often executed in parallel, and memory access latency is traditionally hidden by running several parallel contexts (active warps or hyper-threads). We therefore measured performance using wall-clock execution times, which equal the larger of the compute and memory access durations. Depending on which resource was the bottleneck, we classified problems as compute-bound (calculation time > memory access time) or memory-bound (memory access time > calculation time).
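The classification rule can be sketched in a few lines. This is only an illustration, assuming that compute and memory accesses overlap perfectly; the function name `classify` and the timings in the usage example are hypothetical and are not measurements from this article.

```python
from typing import Tuple


def classify(compute_s: float, memory_s: float) -> Tuple[float, str]:
    """Model wall-clock time under perfect overlap and name the bottleneck."""
    # With full overlap, the slower of the two activities sets the wall-clock time.
    wall_clock_s = max(compute_s, memory_s)
    if compute_s > memory_s:
        bound = "compute-bound"
    elif memory_s > compute_s:
        bound = "memory-bound"
    else:
        bound = "balanced"  # assumption: equal durations get their own label
    return wall_clock_s, bound


if __name__ == "__main__":
    # Hypothetical timings, in seconds.
    print(classify(compute_s=2.0, memory_s=5.0))
```

In practice the overlap is rarely perfect, so a measured wall-clock time may sit somewhat above this maximum.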

However, this duality is complicated by a third factor. Since Intel’s 80386, memory and CPU are no longer synchronized, which introduces some “natural” latency in memory access. This explains why so many codes spend more time waiting for data to become available than performing compute operations, a problem that also limits the achievable memory bandwidth (see Little’s law). In such cases, the algorithms at play are neither memory-bound nor compute-bound. We therefore classified them as latency-bound.
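Little’s law ties latency and bandwidth together: the amount of data that must be in flight equals latency multiplied by bandwidth. The sketch below applies that relation in both directions. The function names and the figures in the usage example (500 ns latency, 200 GB/s peak bandwidth) are assumptions chosen for illustration, not specifications of either platform.

```python
def bytes_in_flight_needed(latency_s: float, bandwidth_bytes_per_s: float) -> float:
    """Little's law: concurrency = latency x throughput."""
    return latency_s * bandwidth_bytes_per_s


def sustained_bandwidth(bytes_in_flight: float, latency_s: float) -> float:
    """Bandwidth actually reachable with a given amount of outstanding data."""
    return bytes_in_flight / latency_s


if __name__ == "__main__":
    latency_s = 500e-9      # hypothetical memory latency
    peak_bw = 200e9         # hypothetical peak bandwidth, bytes per second
    needed = bytes_in_flight_needed(latency_s, peak_bw)
    print(f"bytes in flight needed to saturate the bus: {needed:.0f}")
    # A code that keeps only a tenth of that in flight gets a tenth of the bandwidth.
    print(f"sustained bandwidth: {sustained_bandwidth(needed / 10, latency_s):.3e} B/s")
```

A code that cannot keep enough requests outstanding therefore never reaches peak bandwidth, whatever the hardware specification says.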

Accordingly, we present three problems, one for each category, in several implementations adapted to each of the two hardware platforms. The first problem is memory-bound: we read a large array of floating-point values and sum them up. The second problem is compute-bound: we compose a function a given number of times, so that we know how many floating-point operations are performed. The third problem is latency-bound: we access a small amount of data, process it and store a smaller number of values. This code is neither compute-bound nor memory-bound.
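To make the three problem shapes concrete, here is a rough sketch of each one in plain Python. These are not the benchmark implementations, which target the two hardware platforms; the function names, the affine function `a * x + b` and the pairwise reduction are assumptions chosen only to show the access and compute patterns.

```python
from array import array
from typing import List, Sequence, Tuple


def sum_array(values: Sequence[float]) -> float:
    """Memory-bound shape: one load and one add per element."""
    total = 0.0
    for v in values:
        total += v
    return total


def compose(x: float, n: int, a: float = 0.5, b: float = 1.0) -> Tuple[float, int]:
    """Compute-bound shape: apply f(x) = a * x + b n times, counting flops."""
    flops = 0
    for _ in range(n):
        x = a * x + b   # one multiply and one add
        flops += 2
    return x, flops


def pairwise_reduce(small: Sequence[float]) -> List[float]:
    """Latency-bound shape: read a few values, store fewer (here, half as many)."""
    return [small[i] + small[i + 1] for i in range(0, len(small) - 1, 2)]


if __name__ == "__main__":
    print(sum_array(array("d", [1.0, 2.0, 3.0])))
    print(compose(0.0, 3))
    print(pairwise_reduce([1.0, 2.0, 3.0, 4.0]))
```

The point of the second kernel is that the flop count is known in advance (two per iteration in this sketch), so a measured time converts directly into a flop rate.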

Memory or Compute?

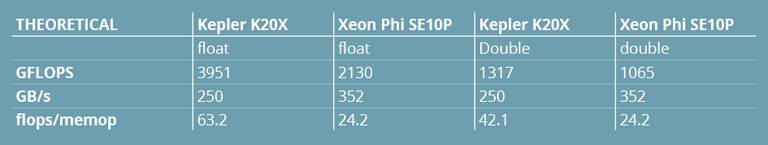

Before going further, let’s take a look at the hardware capabilities to assess a theoretical boundary between compute-bound and memory-bound problems. The latency-bound configuration most often appears with smaller problem sizes, where latency cannot be hidden by other kinds of processing. Table 3, based on the vendors’ specs, summarizes these capabilities and the number of FP operations that need to be performed per memory operation for a given problem to enter the compute-bound or memory-bound area.

Table 3 – Flops / memops ratios necessary to qualify a problem as compute-bound (based on the vendors’ specifications).
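A break-even ratio of this kind can be derived from two vendor figures: peak floating-point rate and peak memory bandwidth. The sketch below shows the arithmetic, assuming that one memory operation moves one 8-byte double. The function name and the figures in the usage example are hypothetical and are not the values of Table 3.

```python
def break_even_flops_per_memop(peak_flops_per_s: float,
                               peak_bandwidth_bytes_per_s: float,
                               word_bytes: int = 8) -> float:
    """Flops needed per memory operation for compute time to match memory time."""
    # Assumption: one memory operation transfers one word of word_bytes bytes.
    memops_per_s = peak_bandwidth_bytes_per_s / word_bytes
    return peak_flops_per_s / memops_per_s


if __name__ == "__main__":
    # Hypothetical device: 1 Tflops peak, 200 GB/s peak bandwidth.
    print(break_even_flops_per_memop(1000e9, 200e9))
```

A problem that performs fewer flops per memory operation than this ratio sits on the memory-bound side of the boundary; one that performs more sits on the compute-bound side.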

What the table shows primarily is that we need 24 to 68 times more floating-point operations than memory operations to be compute-bound. This will come as no surprise to HPC programmers, who know that most problems are limited by memory rather than by compute. After all, adding two vectors into a third one amounts to one compute operation and three memory operations per element. That is why the Gflops specification should not be the preferred performance metric when choosing a parallel accelerator. We will propose a similar table with measured performance indicators at the end of our evaluation (see Table 6 at the end of the article).
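The vector addition example can be checked with simple arithmetic. The sketch below computes its flops per memory operation and compares it with the 24 to 68 range quoted above; the function and constant names are illustrative assumptions.

```python
def flops_per_memop(flops: int, loads: int, stores: int) -> float:
    """Ratio of floating-point operations to memory operations for one element."""
    return flops / (loads + stores)


# Range quoted in the text for a problem to be compute-bound.
COMPUTE_BOUND_LOW = 24
COMPUTE_BOUND_HIGH = 68

# c[i] = a[i] + b[i]: one add, two loads, one store.
VECTOR_ADD_RATIO = flops_per_memop(flops=1, loads=2, stores=1)

if __name__ == "__main__":
    print(f"vector add: {VECTOR_ADD_RATIO:.2f} flops per memory operation")
    print(f"shortfall versus the lower threshold: "
          f"{COMPUTE_BOUND_LOW / VECTOR_ADD_RATIO:.0f}x")
```

With about a third of a flop per memory operation, vector addition falls short of even the lower threshold by a wide margin, which is why it behaves as a memory-bound kernel.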


© HPC Today 2024 - All rights reserved.
