We have just attended Jensen Huang’s keynote, and the least we can say is that NVIDIA’s CEO and founder was visibly excited about the insights he shared with us. The show lasted more than two hours, with major announcements in every area where NVIDIA is present.

Huang opened his presentation by reminding everybody how successful GTC has been over the years. In the last five years, the number of attendees has tripled while the number of GPU developers has grown fivefold.

Project Holodeck

After this short introduction, the first announcement was Project Holodeck. Instead of a long explanation, Huang went straight to a demonstration of the concept. Even though the demo did not start smoothly, we discovered a technology that mixes VR, avatars and motion tracking. So far the concept looks interesting, and early access is expected in September.

The rise of machine learning

The next part of the keynote covered what Jensen Huang called “one of the most important revolutions ever”: machine learning, and the fact that computers are now learning by themselves, with algorithms writing algorithms and programs writing programs. One of the latest innovations in this area is adversarial networks. Two networks are set up and given opposite tasks: one tries to fool the other, while the other tries not to be fooled. Training them together gives impressive results, because each network pushes the other to improve. Huang closed his point on the era of machine learning by telling us that the most popular course at Stanford today is Introduction to Machine Learning.
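
To make the "two networks with opposite tasks" idea concrete, here is a minimal sketch in plain Python. It is not code shown in the keynote: the one-dimensional data, the linear generator, the logistic discriminator, and every name and hyperparameter below are assumptions chosen purely for illustration. Real adversarial networks use deep neural networks on both sides.

```python
import math
import random


def sigmoid(t):
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def train_toy_gan(steps=500, lr=0.05, real_mean=4.0, real_std=0.5, seed=0):
    """Alternate one discriminator step and one generator step per sample.

    Hypothetical toy setup: real data ~ N(real_mean, real_std),
    generator fake = a * z + b, discriminator D(x) = sigmoid(w * x + c).
    """
    rng = random.Random(seed)
    a, b = 1.0, 0.0   # generator parameters
    w, c = 0.1, 0.0   # discriminator parameters
    for _ in range(steps):
        real = rng.gauss(real_mean, real_std)
        z = rng.gauss(0.0, 1.0)
        fake = a * z + b

        # Discriminator: minimise -log D(real) - log(1 - D(fake)),
        # i.e. try not to be fooled.
        d_real = sigmoid(w * real + c)
        d_fake = sigmoid(w * fake + c)
        grad_w = -(1.0 - d_real) * real + d_fake * fake
        grad_c = -(1.0 - d_real) + d_fake
        w -= lr * grad_w
        c -= lr * grad_c

        # Generator: minimise -log D(fake), i.e. try to fool D.
        d_fake = sigmoid(w * fake + c)
        grad_fake = -(1.0 - d_fake) * w
        a -= lr * grad_fake * z
        b -= lr * grad_fake
    return {"a": a, "b": b, "w": w, "c": c}


if __name__ == "__main__":
    print(train_toy_gan())
```

The point of the sketch is the alternation: each update of the discriminator changes the signal the generator learns from, and vice versa. Toy setups like this one can oscillate instead of settling, so it should not be read as a recipe for stable training.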

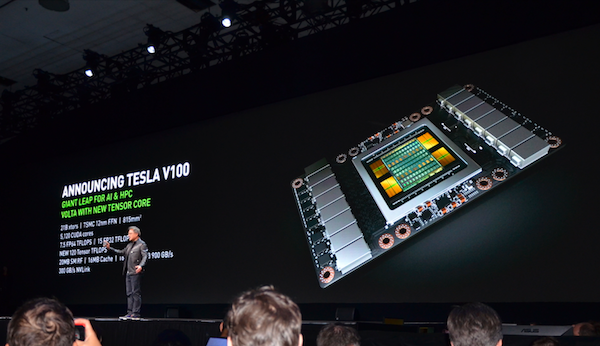

More GPU performance for a better AI

The increase in GPU performance has made it possible to reach a new level of complexity in AI. For Huang, it is now time to open a new chapter in computing. According to him, it is the most complex project the company has ever undertaken, at a cost of 3 billion dollars, and it comes under the name Tesla V100. This new processor brings a 50% performance increase in general-purpose operations compared to Pascal and an impressive 1200% increase in deep learning training. Various demos showed how work that took a few minutes on a Titan X takes only a few seconds on Volta.

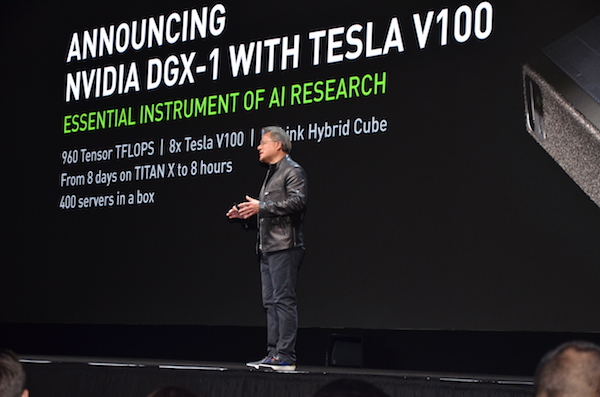

DGX-1 gets an update and a new form factor

The Tesla V100 is also an occasion to update the famous DGX-1, which will now be known as the DGX-1V. The system comes with 8 Tesla V100s in an NVLink Hybrid Cube arrangement. Orders start today at a price of $149,000. Performance now stands at 960 Tensor TFLOPS, with Huang lamenting that it lacks another 40 TFLOPS to become a 1 PFLOPS setup. Deliveries are expected in Q3, and Volta will be available to OEMs during Q4. In addition, all existing DGX-1 orders will be upgraded to the DGX-1V.
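
The figures above can be checked with a few lines of arithmetic. The snippet below is only a sanity check on the numbers quoted in the keynote. The per-GPU value is derived by assuming the 960 Tensor TFLOPS is split evenly across the 8 GPUs, and the variable names are ours.

```python
GPUS_PER_DGX1V = 8            # quoted in the keynote
SYSTEM_TENSOR_TFLOPS = 960    # quoted in the keynote
TFLOPS_PER_PFLOPS = 1000      # SI prefixes: 1 peta = 1000 tera

# Derived values (assumes an even split across the GPUs).
per_gpu_tflops = SYSTEM_TENSOR_TFLOPS / GPUS_PER_DGX1V
gap_to_one_pflops = TFLOPS_PER_PFLOPS - SYSTEM_TENSOR_TFLOPS

print(f"Tensor TFLOPS per V100 (derived): {per_gpu_tflops:.0f}")
print(f"Shortfall to 1 PFLOPS: {gap_to_one_pflops} TFLOPS")
```

In other words, the system sits 4% below the symbolic petaflop mark, which is the gap Huang was joking about.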

But there is more. The DGX-1 will also be available in a new form factor designed for engineers who do not have server setups. Under the name DGX Station, they will be able to purchase a personal DGX with 4 Tesla V100s, water-cooled so that it runs silently. The price is set at $69,000. Finally, the last product announced is the HGX-1, a server for cloud computing with 8 Tesla V100s in a hybrid cube.

Focus on Deep Learning inferencing

Huang also announced the availability of TensorRT for TensorFlow, a compiler for deep learning inferencing with improved performance. With it comes the Tesla V100 for Hyperscale Inference, a 150W full-height, half-length PCIe card. It is interesting to note that NVIDIA is pitching the Tesla V100 for inferencing, whereas with Pascal the P100 targeted training and other products such as the P40 handled inferencing.

Not forgetting about the Cloud

With NVIDIA GPU Cloud, NVIDIA wants to provide a complete, “containerized” GPU deep learning platform, with virtual machines pre-configured for the task. NVIDIA isn’t exactly building its own cloud; it is providing the software and pointing customers to the cloud services that can run it. NVIDIA GPU Cloud will support numerous permutations of software stack versions and GPUs.

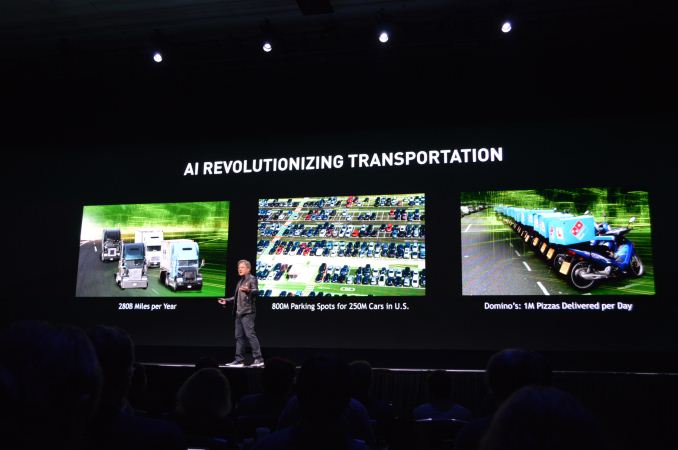

AI for everyday life

Autonomous cars are definitely an area where AI will have a major part to play. NVIDIA now provides a complete solution, the NVIDIA Drive PX, that car manufacturers can use. These partners will be able to adapt the system to their own products.

But the big announcement in this area is that Toyota has selected the NVIDIA Drive PX platform for its autonomous vehicles.

This part of the keynote was also the occasion to discuss the Xavier SoC. Previously announced in 2016, it will now use a Volta-architecture GPU, and the SoC combines a CPU for single-threaded work, a GPU for parallel work, and Tensor cores for deep learning. NVIDIA is going to open-source Xavier’s deep learning accelerator.

Robots to end the show

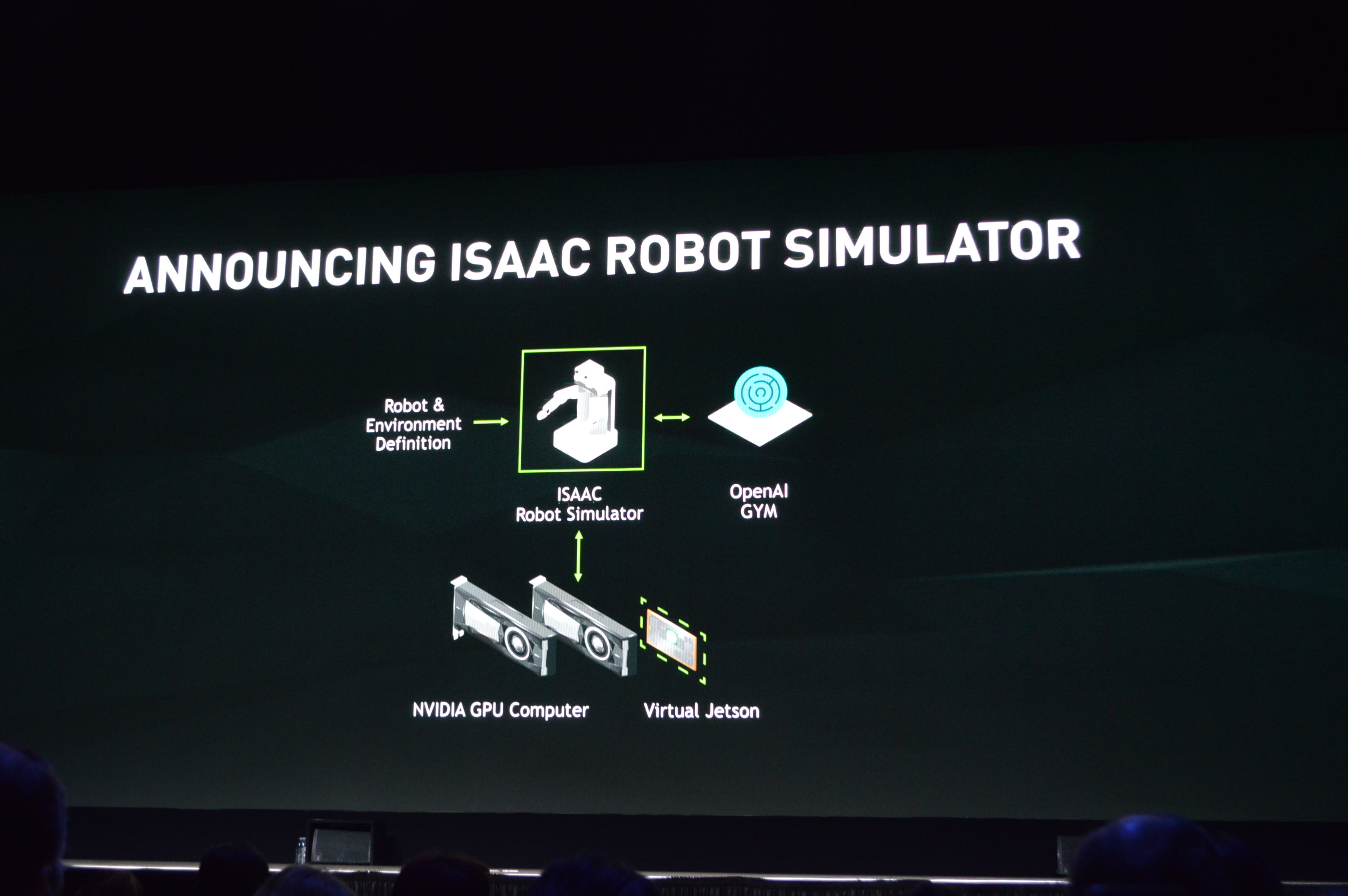

The last part of the keynote was about robots and how deep learning can be used to train them. Huang announced the Isaac robot simulator, named in reference to both Asimov and Newton. NVIDIA’s idea is to train a robot for the physical world in a virtual world. One GPU handles the physics calculations, and another runs the neural network training based on the results of the first. The demo showed a robot being taught to play hockey and golf, and the possibility of running many training sessions in parallel in a virtual world in order to select the best AI to deploy in a real-world robot.
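
The "run many trials in a virtual world, keep the best" idea can be sketched as follows. This is not the Isaac simulator or its API. It is a toy stand-in in which the physics is a single friction formula, each "policy" is just a putting speed, and plain random search replaces neural network training. The friction coefficient and all function names are hypothetical.

```python
import random

GRAVITY = 9.81    # m/s^2
FRICTION = 0.3    # hypothetical rolling-friction coefficient


def simulate_putt(speed):
    """Toy physics: distance (m) a ball rolls before friction stops it."""
    return speed ** 2 / (2.0 * FRICTION * GRAVITY)


def select_best_policy(target_distance, candidates=200, seed=0):
    """Try many candidate speeds in simulation and keep the most accurate."""
    rng = random.Random(seed)
    best_speed, best_error = None, float("inf")
    for _ in range(candidates):
        speed = rng.uniform(0.0, 10.0)   # one candidate "policy"
        error = abs(simulate_putt(speed) - target_distance)
        if error < best_error:
            best_speed, best_error = speed, error
    return best_speed, best_error


if __name__ == "__main__":
    speed, error = select_best_policy(target_distance=5.0)
    print(f"best speed: {speed:.3f} m/s, miss distance: {error:.4f} m")
```

Because every trial is simulated, adding more candidates costs only compute time, which is the appeal of the approach described in the keynote.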

That was the end of the keynote, and we will need a little time to digest all the information we were given during those two hours. We’ll come back with more detailed information soon.

© HPC Today 2024 - All rights reserved.
