This article is part of our feature story: How CERN manages its data

To meet its persistence and access needs, CERN has developed its own hierarchical storage management system – CASTOR (CERN Advanced STORage manager). The initial idea was to let researchers around the world register, list and retrieve files from the command line or from applications through a single dedicated API. The basic principle of CASTOR is that files are always accessed from the disc cache, while the back-end of the system manages their preservation on tape. So that researchers can keep using their existing code, multiple access protocols are available: RFIO, ROOT, XROOT and GridFTP.
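The disc-cache principle can be illustrated with a minimal sketch. This is a toy model, not CASTOR code: the `ToyHSM` class, its method names and the file path are all hypothetical, and a real system adds scheduling, pools and access control on top.

```python
class ToyHSM:
    """Toy hierarchical store: every read is served from the disc cache."""

    def __init__(self):
        self.disc_cache = {}  # path -> bytes currently staged on disc
        self.tape = {}        # path -> bytes archived on tape
        self.recalls = 0      # number of tape-to-disc recalls performed

    def write(self, path, data):
        # New files always land in the disc cache first.
        self.disc_cache[path] = data

    def migrate(self, path):
        # Back-end step: copy the file to tape; the disc copy stays for now.
        self.tape[path] = self.disc_cache[path]

    def evict(self, path):
        # Free disc space, but only once a tape copy exists.
        if path not in self.tape:
            raise RuntimeError(f"{path} has no tape copy yet")
        del self.disc_cache[path]

    def read(self, path):
        # Clients never read from tape directly: a cache miss triggers a recall.
        if path not in self.disc_cache:
            if path not in self.tape:
                raise FileNotFoundError(path)
            self.disc_cache[path] = self.tape[path]
            self.recalls += 1
        return self.disc_cache[path]


hsm = ToyHSM()
hsm.write("/castor/example/run001.dat", b"events")  # hypothetical path
hsm.migrate("/castor/example/run001.dat")
hsm.evict("/castor/example/run001.dat")
data = hsm.read("/castor/example/run001.dat")  # served after one recall
print(data, hsm.recalls)
```

Reading the same file a second time does not touch the tape again, since the recalled copy stays in the cache until it is evicted.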

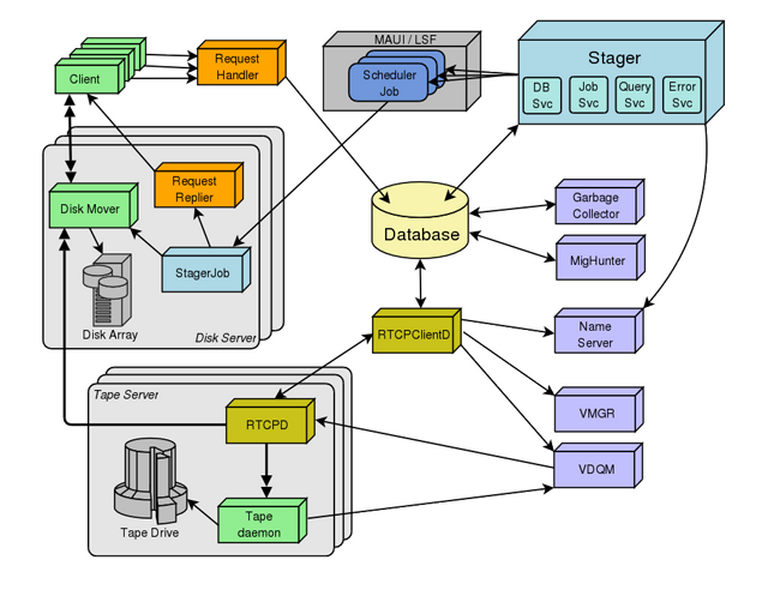

Fig. 1 – Architecture of the CASTOR system. Oracle databases play a central role, and the stager is the point of entry. (CERN Document)

At the heart of the CASTOR architecture, a central database records the status changes of the components. Broadly speaking, the architecture is based on five functional modules:

• the stager allocates and reclaims storage space, controls client access and manages the catalogue of local disc clusters.

• the name server (files and directories) holds file metadata (size, dates, checksums, owners, tape copy information). The namespace is managed with command-line utilities modelled on their Unix counterparts.

• the tape infrastructure provides for the copying of files under certain circumstances (e.g. to secure the data) and for the storage of files that are larger than the immediately available disc capacity.

• the client software allows users to upload and download data files and to manage CASTOR data.

• the Storage Resource Manager (SRM) gives access to data in a grid environment via its own protocol. It interacts with CASTOR on behalf of a user or of other services such as FTS (the file transfer service used by the LHC community to export data).

To maximize storage efficiency, CASTOR prioritizes large files (1 GB or more). For most small and/or non-experimental files, users are invited to use AFS, a more conventional distributed file system. Typically, a file stored via CASTOR is written to tape in less than 24 hours (8 hours for experimental physics data). The disc copies are then deleted asynchronously.
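These guidelines can be written down as a small rule of thumb. The sketch below is only an illustration of the figures quoted above: the function names are hypothetical, "1 GB" is assumed to mean 10^9 bytes, and the actual decision at CERN is a recommendation to users, not an automated check.

```python
from datetime import datetime, timedelta

GB = 10 ** 9  # assumption: decimal gigabyte


def suggest_store(size_bytes, experimental):
    """Suggest a storage system following the guidelines quoted in the text."""
    # Large experimental files are what CASTOR is optimized for.
    if size_bytes >= GB and experimental:
        return "CASTOR"
    # Small and/or non-experimental files are usually better off on AFS.
    return "AFS"


def tape_deadline(written_at, experimental):
    """Latest time by which a CASTOR file is typically on tape."""
    hours = 8 if experimental else 24
    return written_at + timedelta(hours=hours)


print(suggest_store(5 * GB, experimental=True))
print(suggest_store(20 * 10 ** 6, experimental=True))
print(tape_deadline(datetime(2024, 1, 1, 9, 0), experimental=True))
```

The 8-hour and 24-hour values are typical upper bounds from the text, so the returned deadline is an estimate, not a guarantee.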

The time needed to recall data from tape varies with the system’s current workload, but the average is about 4 hours. Applications requiring this data wait from the time of the request until the recall completes, after which they start automatically. However, preparation requests can be sent so that data is loaded onto disc in advance. In addition, research groups and experiments each have an SLA that defines precise storage strategies suited to the work being carried out.
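The benefit of sending a preparation request ahead of time can be shown with simple arithmetic. The sketch below assumes a fixed 4-hour recall (the average quoted above; real recall times depend on load) and uses a hypothetical helper, `job_wait_hours`, on a clock measured in hours.

```python
AVERAGE_RECALL_HOURS = 4.0  # average quoted in the text; actual times vary


def job_wait_hours(job_start, prestage_sent=None,
                   recall_hours=AVERAGE_RECALL_HOURS):
    """Hours an application blocks before its data is available on disc."""
    # Without a preparation request, the recall begins when the job asks.
    if prestage_sent is None:
        request_time = job_start
    else:
        request_time = min(prestage_sent, job_start)
    ready_time = request_time + recall_hours
    # The job cannot wait a negative amount of time.
    return max(0.0, ready_time - job_start)


print(job_wait_hours(10.0))                     # 4.0: no preparation request
print(job_wait_hours(10.0, prestage_sent=8.0))  # 2.0: sent 2 hours early
print(job_wait_hours(10.0, prestage_sent=5.0))  # 0.0: data already on disc
```

In this simplified model, a preparation request sent at least one recall time before the job starts removes the wait entirely.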


© HPC Today 2024 - All rights reserved.
